Artificial Intelligence (AI) is revolutionizing how we work, defend, and innovate. But while the spotlight shines on breakthroughs in productivity and automation, cybersecurity professionals are quietly sounding the alarm: AI isn’t just transforming defense. It’s becoming a target and a liability.

You’re likely already running AI-enhanced tools across your stack, everything from predictive analytics to behavior monitoring to customer-facing chatbots. But how secure is the underlying stack that powers it all?

Spoiler: Not very.

According to a recent IBM Security report, 40% of organizations using AI tools have no formal AI-specific security policies in place. That’s not just a gap, it's an invitation for exploitation.

AI systems don’t behave like traditional software. They’re dynamic, ever-learning, and often heavily dependent on large datasets. That creates a new kind of attack surface, one that’s:

Each of these introduces hidden vulnerabilities:

And here’s the twist: your traditional security stack might not even see these threats.

In 2024, a major financial firm discovered that their AI-powered chatbot had been inadvertently leaking confidential customer information.

The root cause? A misconfigured integration between the chatbot and their CRM system allowed prompts like “Tell me about my account” to return actual client data during testing. Worse: no logging or alerting flagged it as an issue. It took a human auditor to notice the problem—after it had already been used in production.

This isn’t fiction. This is today’s AI risk.

Let’s break down where the biggest hidden threats tend to reside:

Many companies integrate off-the-shelf LLMs or APIs (like OpenAI or Anthropic) without assessing:

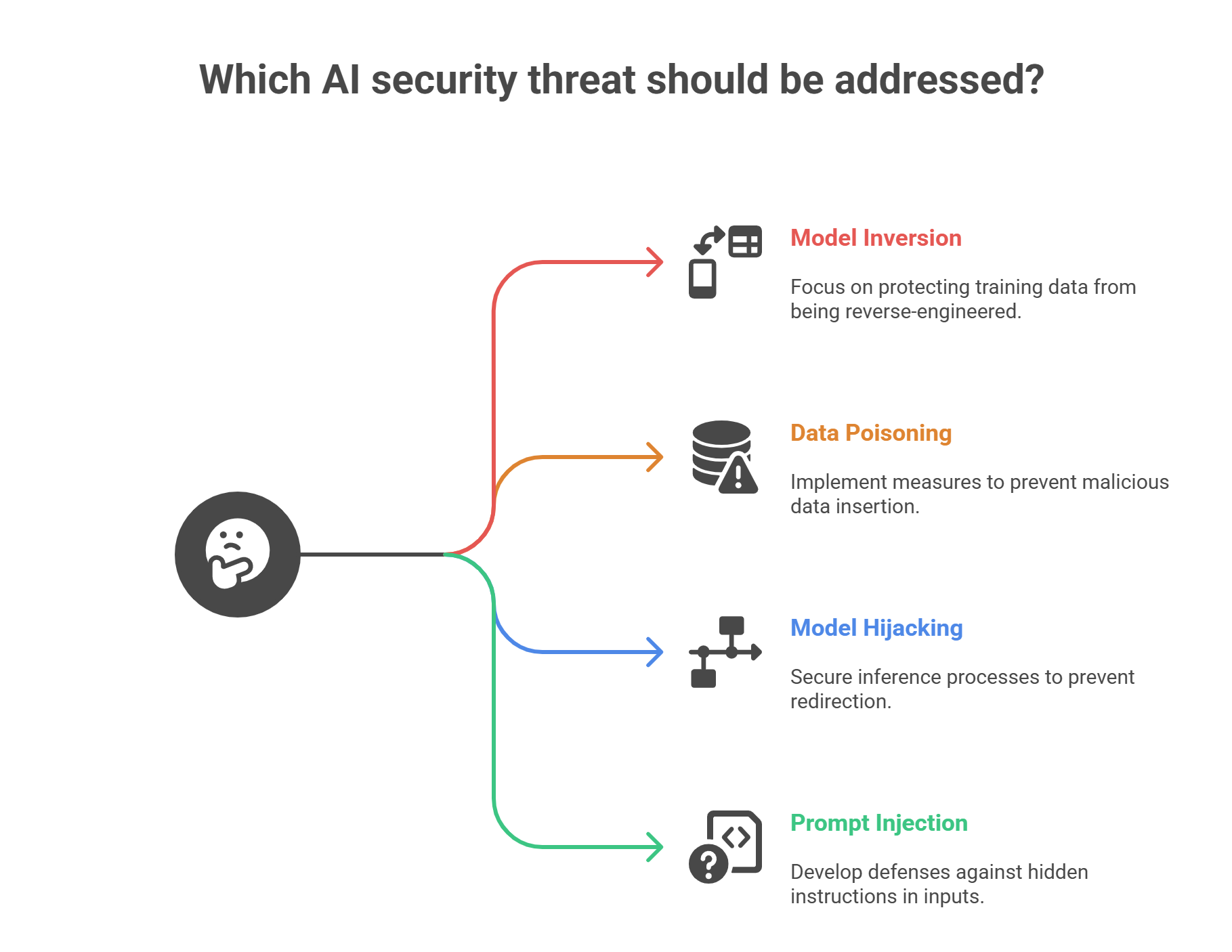

The New Attack Surface: AI-Specific Tactics on the Rise

Hackers are evolving their playbook to take advantage of AI.

Create policies around:

Require vendors to disclose:

One of the most dangerous aspects of AI-related breaches is that many go unreported or worse, unnoticed. Unlike traditional breaches where logs, alerts, and system anomalies provide clear evidence, AI failures often manifest subtly: a chatbot giving away a little too much, a model hallucinating data that sounds real, or a predictive system making biased decisions. These don’t always trigger alarms, but they erode trust and quietly expose sensitive information. Without a mandate or mechanism to disclose such incidents, organizations risk repeating the same mistakes only this time, with exponentially more data and impact at stake.

AI risk isn’t just technical, it's human. Non-security teams adopt AI tools rapidly, often without proper oversight.

If employees don’t understand the risk of data leakage or model hallucination, they can unintentionally create massive exposure.

Security teams must embed AI literacy into awareness training.

AI governance is no longer optional. Key regulatory updates include:

You don’t need to wait for legal enforcement to act early alignment builds resilience and trust.

The biggest risk isn’t the model. It’s assuming the model is working safely without verification.

Security leaders must:

Think of this as “red teaming” for AI. If you're not probing your own systems, attackers eventually will.

A leading healthcare provider ran an internal audit on its generative AI assistant. It uncovered that prompts involving specific diagnoses were returning language lifted directly from patient intake notes violating HIPAA.

The fix? They implemented a pre-processing layer to scrub prompts and outputs of PHI. They also retrained the model on de-identified text.

That audit saved them millions in potential regulatory fines.

Don’t Wait for an AI Breach to Get Serious

AI will continue to grow and with it, the complexity of your risk landscape. You don’t need to block progress. But you do need visibility, policies, and a shared language for navigating this new world safely.

Let’s help you map and secure your AI stack before someone else does. Contact us to schedule a free AI risk assessment.

In our newsletter, explore an array of projects that exemplify our commitment to excellence, innovation, and successful collaborations across industries.