Artificial intelligence is no longer a future-state capability; it is now deeply embedded in the systems that run finance, security, HR, supply chain, risk, and decision-making across digital enterprises. But as AI automates controls, analyzes risk, and influences business outcomes, the question of accountability is rising fast on every boardroom agenda.

The convergence of AI, audit, and enterprise governance has created a new era where traditional assurance models are no longer sufficient. Manual evidence, annual controls testing, and compliance-by-checklist have no place in a world where machine-driven actions can happen in milliseconds, across platforms, with global business consequences.

This blog explores how AI is reshaping the role of audit, what accountability means in an automated world, and how modern governance must evolve to stay secure, compliant, and trusted.

For decades, governance and audit programs were built around predictable human activity, manual controls, and documented evidence trails. AI has redefined that model. Controls that were once executed manually are now carried out by algorithms. Evidence that once took the form of screenshots, logs, and sign-offs now exists as real-time data streams and machine-generated records. Accountability, once tied to a single process owner, is now shared between human oversight and autonomous systems. In this new reality, if an AI model approves financial transactions, adjusts inventory, or grants system access based on behavioral patterns, auditors must validate not only the outcome but also the decision logic behind it: how the algorithm made the choice, what data it relied on, and whether that logic is compliant, ethical, and explainable.
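To make "validate the decision logic, not just the outcome" concrete, here is a minimal sketch of a decision record that captures the model version, inputs, outcome, and rationale for each AI decision, and links records in a hash chain so that later edits are detectable. The names `DecisionRecord`, `append_record`, and `verify_chain` are illustrative assumptions, not part of any specific product or standard.

```python
import hashlib
import json
from dataclasses import dataclass, asdict

GENESIS = "0" * 64  # placeholder hash for the first record (assumption)


@dataclass(frozen=True)
class DecisionRecord:
    """One AI decision, captured with the context an auditor would need."""
    decision_id: str
    model_version: str  # which model made the call
    inputs: dict        # the data the model relied on (must be JSON-serializable)
    outcome: str        # e.g. "approved" or "rejected"
    rationale: str      # human-readable summary of the decision logic
    prev_hash: str      # digest of the previous record, forming a chain

    def digest(self) -> str:
        # Canonical JSON so the same record always produces the same hash.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def append_record(log, **fields):
    """Append a new record that points at the digest of the previous one."""
    prev = log[-1].digest() if log else GENESIS
    record = DecisionRecord(prev_hash=prev, **fields)
    log.append(record)
    return record


def verify_chain(log) -> bool:
    """Return True only if every record points at the digest of its predecessor."""
    expected = GENESIS
    for record in log:
        if record.prev_hash != expected:
            return False
        expected = record.digest()
    return True
```

A chain like this only shows that earlier records were not altered after later ones were written; the digest of the most recent record would still need to be stored somewhere the model's operators cannot change.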

This is governance beyond controls: it is governance of logic, data integrity, algorithmic bias, model drift, and digital trust.

In traditional audits, a control failure could be traced to a person, a policy, or a system error. But when AI is the control, accountability has layers:

Without clear ownership and governance, AI becomes a black box, and black boxes are unacceptable in regulated environments, especially those involving finance, identity, security, and compliance.

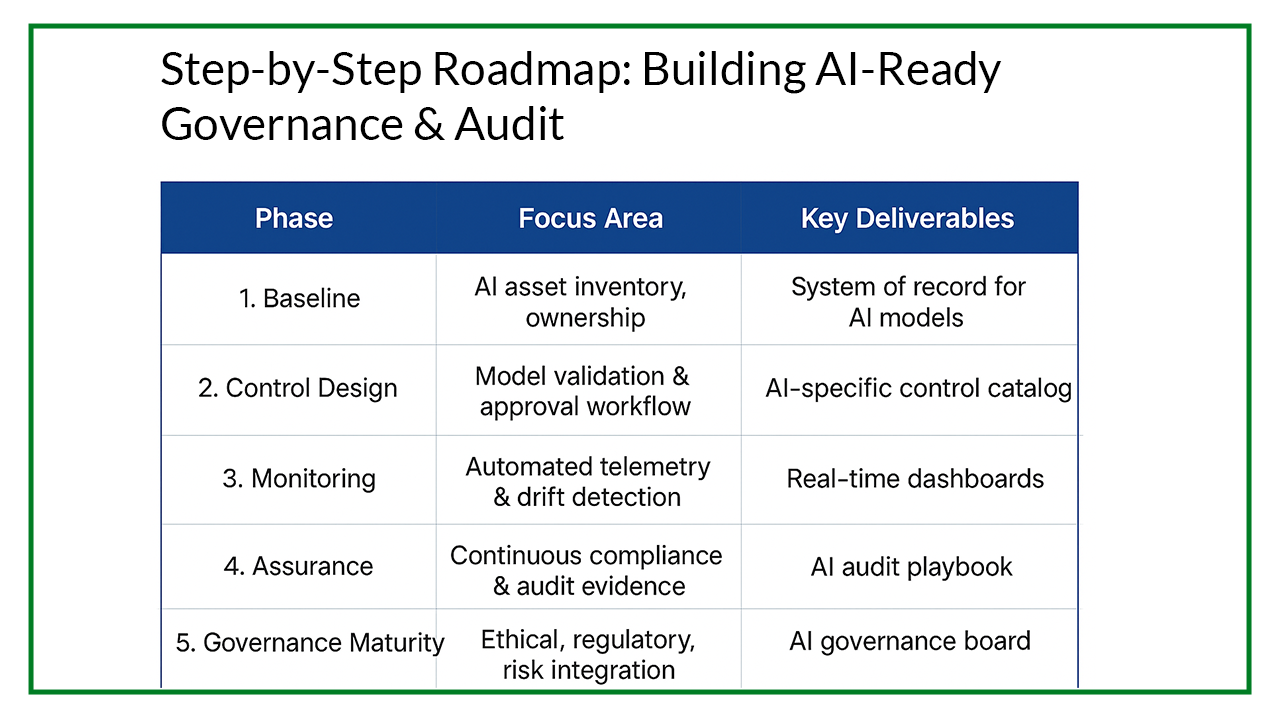

To maintain trust and accountability, organizations must evolve their governance operating model. The modern framework is built on five pillars:

Auditors must be able to answer:

AI outcomes are only as reliable as the data feeding them.

Controls must validate:

Just like access controls and financial controls, AI models must undergo:

Organizations need a risk register for AI just like they do for vendors, systems, and identities.
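As a rough illustration, an AI risk register can start as a simple structured list that records an owner, a risk description, a score, and a review date for each model. The sketch below assumes a hypothetical 1-to-5 likelihood and impact scale and a hypothetical attention threshold of 12; the field names and the `needs_attention` helper are illustrative only.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AIRiskEntry:
    model_name: str
    owner: str         # the accountable human, not the model
    risk: str          # short description of what could go wrong
    likelihood: int    # 1 (rare) to 5 (almost certain), assumed scale
    impact: int        # 1 (minor) to 5 (severe), assumed scale
    next_review: date  # when this entry must be re-assessed

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring, as in many risk matrices.
        return self.likelihood * self.impact


def needs_attention(register, today, threshold=12):
    """Return entries that score at or above the threshold or are overdue for review,
    highest score first."""
    flagged = [e for e in register if e.score >= threshold or e.next_review < today]
    return sorted(flagged, key=lambda e: e.score, reverse=True)


# Example usage with made-up entries.
register = [
    AIRiskEntry("invoice-approver", "Finance Controller", "threshold drift", 4, 4, date(2025, 6, 1)),
    AIRiskEntry("access-recommender", "IAM Lead", "biased access grants", 2, 3, date(2025, 1, 1)),
    AIRiskEntry("demand-forecaster", "Supply Chain Lead", "stale training data", 2, 2, date(2025, 12, 1)),
]
for entry in needs_attention(register, today=date(2025, 3, 1)):
    print(entry.model_name, entry.owner, entry.score)
```

The point of the structure is that every model has a named owner and a review date, so an overdue or high-scoring entry always leads to a person.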

Regulations, standards, and frameworks such as the EU AI Act, ISO/IEC 42001, and the NIST AI RMF call for clear accountability for AI-driven decisions.

AI is not just something auditors must audit; it is reshaping the audit process itself.

Audit 1.0 → Manual Checklist Reviews

Audit 2.0 → Digitized, Control Testing Tools

Audit 3.0 → Continuous Auditing + Automated Evidence

Audit 4.0 → Predictive + AI-Assisted Assurance

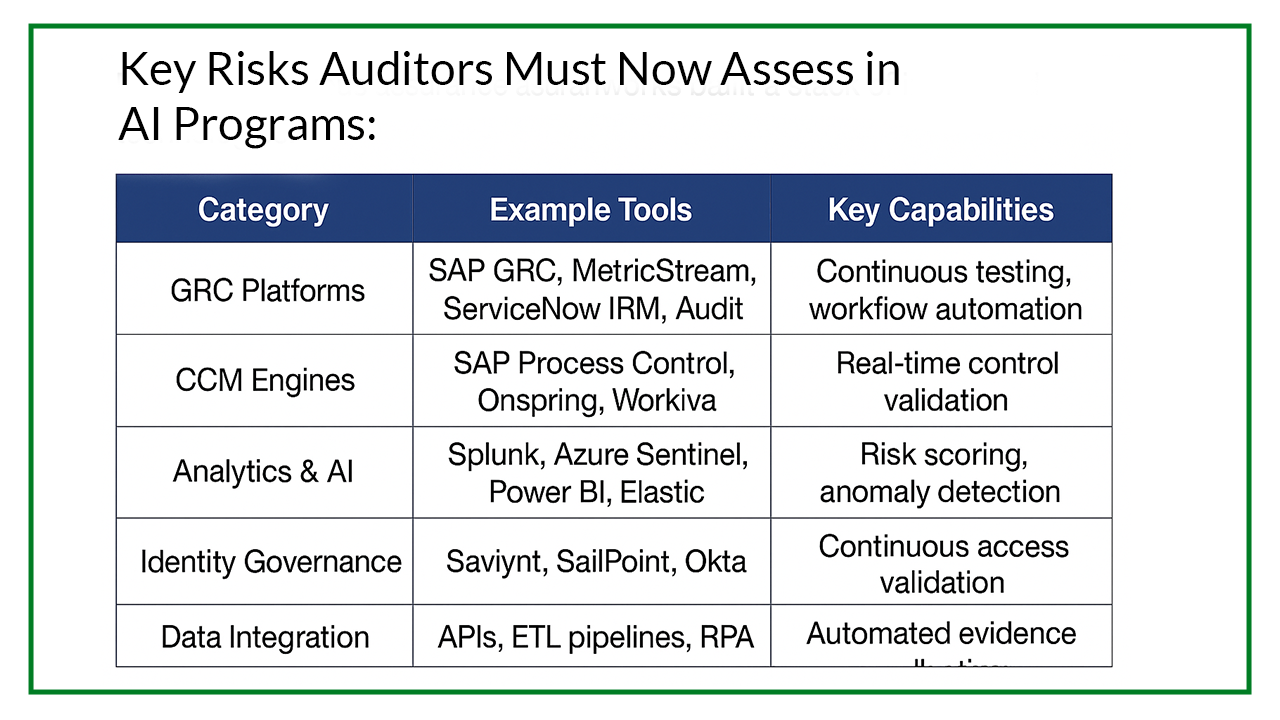

AI-Enabled Audit Capabilities:

The new internal auditor is part data analyst, part technologist, part governance architect.

A Fortune 100 enterprise deployed an AI-based continuous monitoring system to approve journal entries and detect fraudulent spend patterns.

Result:

However, the system was flagged when auditors discovered that model drift had altered approval thresholds without going through change approval, triggering a governance redesign.

Lesson: AI increases efficiency, but accountability can never be automated.
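The kind of finding in this case study, an approval threshold that moved without change approval, can be tested continuously rather than discovered after the fact. The sketch below assumes the model's effective threshold is captured periodically alongside any change ticket on file; `ThresholdSnapshot`, `find_unapproved_changes`, and the ticket IDs are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ThresholdSnapshot:
    model_version: str
    approval_threshold: float     # the threshold the model is actually applying
    change_ticket: Optional[str]  # None means no change approval is on file


def find_unapproved_changes(snapshots, tolerance=1e-9):
    """Compare each snapshot with the one before it and report every threshold
    move that has no change ticket attached."""
    findings = []
    for prev, curr in zip(snapshots, snapshots[1:]):
        moved = abs(curr.approval_threshold - prev.approval_threshold) > tolerance
        if moved and curr.change_ticket is None:
            findings.append((prev, curr))
    return findings


# Example usage with made-up values.
history = [
    ThresholdSnapshot("v1", 10_000.0, "CHG-001"),  # approved baseline
    ThresholdSnapshot("v1", 10_000.0, None),       # unchanged, nothing to approve
    ThresholdSnapshot("v1", 12_500.0, None),       # moved without approval
    ThresholdSnapshot("v2", 15_000.0, "CHG-002"),  # moved with approval
]
for prev, curr in find_unapproved_changes(history):
    print(f"Threshold moved {prev.approval_threshold} -> {curr.approval_threshold} with no change ticket")
```

A check like this does not explain why the threshold moved; it only routes the change to a human owner, which is where accountability sits.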

At TechRisk Partners (TRPGLOBAL), we help organizations build AI-ready governance, audit, and accountability frameworks, from algorithm oversight to automated assurance. If your auditors can’t explain your AI decisions, your enterprise isn’t compliant, and soon it won’t be trusted either.

Want a roadmap for AI governance maturity? Schedule a strategy session
